Year 2020: Covid-19 and video calls. Video calls in browsers, native apps and desktop apps.

One of the first things that happened during the pandemic was moving the office meeting room into a virtual one. Fair enough.

Since then, there is one question I get asked all the time: how do you build a video conference application?

My answer: it's all about the UI.

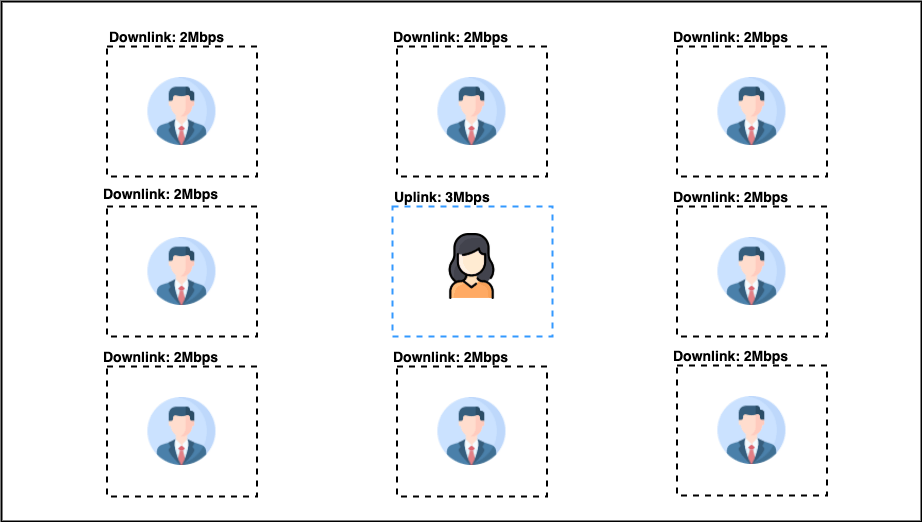

Room Layout

You are probably very familiar with the layout above. Let me run the numbers: a good 1280x720 stream at 30 fps needs 1.5-2 Mbps of downlink. With the 8 audio-video streams in the picture above, you would need up to 16 Mbps of downlink (8 x 2 Mbps).

| Subscribers | Bitrate per Subscriber | Total Downlink |
| --- | --- | --- |
| 8 | 2 Mbps | 16 Mbps |

| Publishers | Bitrate per Publisher | Total Uplink |
| --- | --- | --- |
| 1 | 2 Mbps | 2 Mbps |
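The arithmetic behind these tables is simply the number of streams times the bitrate of each one. Here is a minimal TypeScript sketch of it, assuming 2 Mbps per HD stream (the upper end of the range above); `StreamGroup` and `totalKbps` are illustrative names, not part of any SDK.

```typescript
// Assumed per-stream bitrate in kbps: 1280x720 @ 30 fps, upper end of the
// 1.5-2 Mbps range. Real encoders vary with content, codec and network.
const HD_KBPS = 2000;

interface StreamGroup {
  count: number;       // number of streams in this group
  bitrateKbps: number; // bitrate of each stream in the group
}

// Sum of count * bitrate over every group of streams.
function totalKbps(groups: StreamGroup[]): number {
  return groups.reduce((sum, g) => sum + g.count * g.bitrateKbps, 0);
}

// Every participant in the grid is received in HD.
const downlinkKbps = totalKbps([{ count: 8, bitrateKbps: HD_KBPS }]);
// The local user publishes a single HD stream.
const uplinkKbps = totalKbps([{ count: 1, bitrateKbps: HD_KBPS }]);

console.log(`downlink: ${downlinkKbps / 1000} Mbps, uplink: ${uplinkKbps / 1000} Mbps`);
// prints "downlink: 16 Mbps, uplink: 2 Mbps"
```

Working in integer kbps keeps the sums exact; convert to Mbps only for display.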

As an upper limit, a good practice is to display at most 16-20 audio-video streams and to fall back to audio only beyond that limit, because every additional video stream costs bandwidth and CPU on the end device.

Note: these limits apply to desktop devices. The same analysis holds for mobile devices, but with fewer concurrent audio-video streams; I would say up to 6-8.
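A minimal sketch of the audio-only fallback, assuming caps of 20 video streams on desktop and 8 on mobile (the upper ends of the ranges above) and assuming you want to keep video for the most recent speakers. `planMedia`, `Participant` and `lastSpokeAt` are hypothetical names; how you actually stop receiving a video track depends on your videoconferencing SDK or media server.

```typescript
type DeviceType = "desktop" | "mobile";

// Assumed caps, picked from the ranges above (16-20 on desktop, 6-8 on mobile).
const MAX_VIDEO_STREAMS: Record<DeviceType, number> = {
  desktop: 20,
  mobile: 8,
};

interface Participant {
  id: string;
  lastSpokeAt: number; // hypothetical: ms timestamp of last detected speech, 0 if never
}

interface MediaPlan {
  video: string[];     // participant ids received as audio + video
  audioOnly: string[]; // participant ids received as audio only
}

// Keep video for the most recent speakers up to the device cap,
// and fall back to audio only for everyone else.
function planMedia(participants: Participant[], device: DeviceType): MediaPlan {
  const sorted = [...participants].sort((a, b) => b.lastSpokeAt - a.lastSpokeAt);
  const cap = MAX_VIDEO_STREAMS[device];
  return {
    video: sorted.slice(0, cap).map((p) => p.id),
    audioOnly: sorted.slice(cap).map((p) => p.id),
  };
}

// 12 participants; p11 spoke most recently, p0 least recently.
const people: Participant[] = Array.from({ length: 12 }, (_, i) => ({ id: `p${i}`, lastSpokeAt: i }));
const plan = planMedia(people, "mobile");
console.log(plan.video.length, plan.audioOnly.length); // prints "8 4"
```

Sorting by recent speech is only one possible priority; pinned participants or hosts could be ranked first instead.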

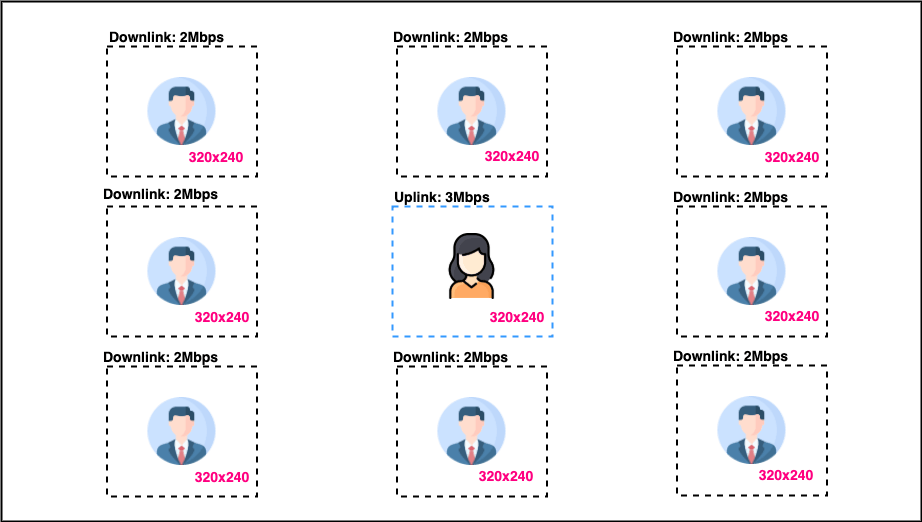

Video Resolution

The problem with the layout above is that your application receives the video streams in high quality but displays them in small HTML elements. Specifically, the app receives 720p streams and renders them into 320x240 divs, so each stream carries 12 times more pixels than its div can show.

Most likely, one or two streams belong to the main hosts. The others are participants who will speak only for a limited amount of time. You want to focus on the main streams and get the best quality from them. In your application's UI, the main streams are the ones displayed largest on the page.

You can implement logic that subscribes to the main streams in HD quality and to the other ones in lower quality.
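The subscription logic can be as simple as a lookup against the set of main hosts. This is a sketch under assumptions: `planSubscriptions` and `mainIds` are hypothetical, and how the chosen quality reaches your media server (for example as a simulcast layer selection on an SFU) depends on your stack and is not shown.

```typescript
type Quality = "HD" | "VGA" | "QVGA";

interface Subscription {
  participantId: string;
  quality: Quality;
}

// Subscribe to the main hosts in HD and to everyone else in a lower quality.
// How `mainIds` is decided (meeting roles, pinned tiles, the largest elements
// in your layout) is up to the application.
function planSubscriptions(
  participantIds: string[],
  mainIds: Set<string>,
  lowQuality: Quality = "QVGA",
): Subscription[] {
  return participantIds.map((id): Subscription => ({
    participantId: id,
    quality: mainIds.has(id) ? "HD" : lowQuality,
  }));
}

const subs = planSubscriptions(["host", "alice", "bob"], new Set<string>(["host"]));
console.log(subs.map((s) => `${s.participantId}: ${s.quality}`).join(", "));
// prints "host: HD, alice: QVGA, bob: QVGA"
```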

Common video resolutions:

| Resolution | Frame Rate (fps) | Bitrate per Stream |
| --- | --- | --- |
| 1280x720 (HD) | 30 | 2 Mbps |
| 640x480 (VGA) | 30 | 500 kbps |
| 320x240 (QVGA) | 30 | 150 kbps |
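A rough way to apply this table is to pick the smallest resolution that still covers the HTML element. The sketch below assumes the element size is given in device pixels (in a browser, roughly its CSS size multiplied by `window.devicePixelRatio`) and reuses the approximate bitrates from the table; `pickResolution` is an illustrative helper, not a library function.

```typescript
interface Resolution {
  label: string;
  width: number;
  height: number;
  bitrateKbps: number; // approximate, from the table above
}

// Ordered from smallest to largest.
const RESOLUTIONS: Resolution[] = [
  { label: "QVGA", width: 320, height: 240, bitrateKbps: 150 },
  { label: "VGA", width: 640, height: 480, bitrateKbps: 500 },
  { label: "HD", width: 1280, height: 720, bitrateKbps: 2000 },
];

// Return the smallest resolution at least as large as the element in both
// dimensions; fall back to the largest one for bigger elements.
function pickResolution(widthPx: number, heightPx: number): Resolution {
  const fit = RESOLUTIONS.find((r) => r.width >= widthPx && r.height >= heightPx);
  return fit ?? RESOLUTIONS[RESOLUTIONS.length - 1];
}

console.log(pickResolution(320, 240).label);  // prints QVGA (a thumbnail)
console.log(pickResolution(1280, 720).label); // prints HD (the main tile)
```

With this rule, the 320x240 thumbnails from the first layout would be received in QVGA instead of 720p.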

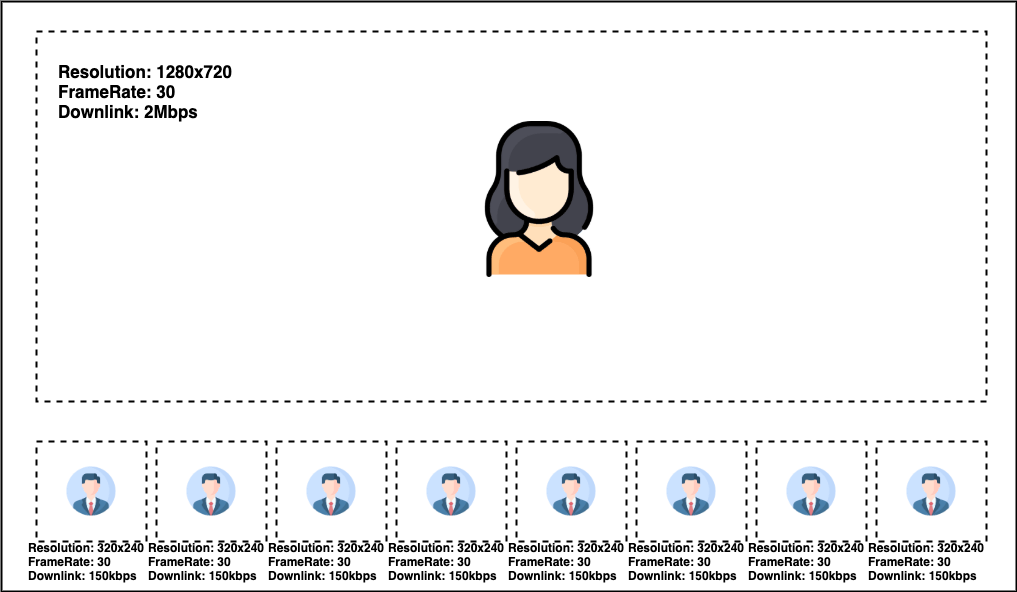

Better Layout

The main stream is displayed in a larger HTML element, so it makes sense to get the maximum quality for it. The streams along the bottom, on the other hand, are displayed in smaller HTML elements, so the application doesn't need 720p for them. Plus, the user's attention is on the main stream, so receiving the others at a lower quality has no noticeable impact on the user experience. It's a win-win optimisation: the user experience is not affected, and the required bandwidth is much lower than receiving every stream in high quality.

New Required Bandwidth:

| Subscribers | Bitrate per Subscriber | Total Downlink |
| --- | --- | --- |
| 1 | 2 Mbps | 2 Mbps |
| 7 | 150 kbps | 1.05 Mbps |

We went from 16 Mbps of required downlink to 3.05 Mbps ✌️
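The same calculation for the new layout, as a small sketch reusing the approximate bitrates from the resolution table (2 Mbps for HD, 150 kbps for QVGA); `Tier` and `downlinkKbps` are illustrative names.

```typescript
// Approximate per-stream bitrates in kbps, from the resolution table.
const BITRATE_KBPS = { HD: 2000, QVGA: 150 } as const;

type Tier = keyof typeof BITRATE_KBPS;

// Total downlink in kbps for a list of subscribed streams.
function downlinkKbps(tiers: Tier[]): number {
  return tiers.reduce((sum, t) => sum + BITRATE_KBPS[t], 0);
}

const allHd: Tier[] = new Array<Tier>(8).fill("HD");
const mixed: Tier[] = ["HD", ...new Array<Tier>(7).fill("QVGA")];

const before = downlinkKbps(allHd); // 8 x 2000
const after = downlinkKbps(mixed);  // 2000 + 7 x 150
const savedPercent = Math.round((1 - after / before) * 100);

console.log(`${before / 1000} Mbps -> ${after / 1000} Mbps (${savedPercent}% less)`);
// prints "16 Mbps -> 3.05 Mbps (81% less)"
```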

Wrapping up

Doing video calls all day long is stressful; it's even more stressful when the application is poorly implemented.

When implementing a video conference application, you need to focus on the UI, because the UI implementation directly affects performance. The application doesn't need to rearrange the video streams every few milliseconds in response to active-speaker events, nor receive every single stream in high quality. Remember that not everyone will talk in a multiparty conference call: you can mute those participants and lower their resolution.
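One way to avoid reshuffling the layout on every speaker event is to add some hysteresis. The class below is a hypothetical sketch, not an API of any SDK: it promotes a participant to the main tile only after they have been reported as the active speaker, with no one else reported in between, for `holdMs`, and it never swaps the main tile more often than every `minDwellMs`. The default thresholds (1.5 s and 5 s) are assumptions to tune, and timestamps are passed in explicitly so the logic is easy to test.

```typescript
// Hypothetical helper: decides which participant occupies the main tile.
class StableSpeaker {
  private current: string | null = null;   // participant in the main tile
  private lastSwitchAt = 0;                // ms timestamp of the last main-tile change
  private candidate: string | null = null; // participant waiting to be promoted
  private candidateSince = 0;              // ms timestamp when the candidate was first seen
  private readonly holdMs: number;
  private readonly minDwellMs: number;

  constructor(holdMs = 1500, minDwellMs = 5000) {
    this.holdMs = holdMs;
    this.minDwellMs = minDwellMs;
  }

  // Call on every active-speaker event; returns who is shown in the main tile.
  onSpeaker(id: string, now: number): string | null {
    if (this.current === null) {
      // The first speaker fills the empty main tile immediately.
      this.current = id;
      this.lastSwitchAt = now;
      return this.current;
    }
    if (id === this.current) {
      this.candidate = null; // main speaker is talking again: drop any pending switch
      return this.current;
    }
    if (id !== this.candidate) {
      this.candidate = id; // a new candidate starts its hold period
      this.candidateSince = now;
    }
    const heldLongEnough = now - this.candidateSince >= this.holdMs;
    const dwellElapsed = now - this.lastSwitchAt >= this.minDwellMs;
    if (heldLongEnough && dwellElapsed) {
      this.current = id;
      this.lastSwitchAt = now;
      this.candidate = null;
    }
    return this.current;
  }
}

const speaker = new StableSpeaker(1500, 5000);
speaker.onSpeaker("alice", 0);  // "alice": the first speaker fills the main tile
speaker.onSpeaker("bob", 1000); // "alice": bob just started talking
speaker.onSpeaker("bob", 3000); // "alice": bob held 2 s, but alice has been on for only 3 s
speaker.onSpeaker("bob", 6000); // "bob": hold and dwell times are both satisfied
```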

If this story was useful, follow me on Twitter for more!